In this post, we’ll discuss why traditional threat modeling approaches may fall short for AI-based systems and introduce some key questions to help guide the threat modeling process of AI-based systems.

The approach is, in part, a reflection of what we’ve learned from our own threat modeling process and is aimed at sharing some of the insights and questions that helped us better understand and secure our AI and ML technologies. These questions cover crucial areas, including the model itself, its data, infrastructure, and safety considerations. While not exhaustive, these prompts are designed to help approach the threat modeling of AI systems with a more targeted perspective.

Why standard threat modeling falls short

Treating AI-based technologies as another piece of tech during the threat modeling process can overlook significant risks that could impact an organization. As machine learning (ML) and artificial intelligence (AI) become central to more applications, the excitement surrounding these technologies often leads organizations to focus on rapid deployment and experimentation. Yet, in the rush to innovate, it’s easy to ignore the security implications of launching ML systems.

Many AI-focused threat modeling tools (e.g., IriusRisk, PLOT4ai, and MITRE ATLAS) offer checklists of adversarial security threats such as poisoning or evasion, but stop short of fully analyzing the system they’re meant to protect. This approach can lead to blind spots, as organizations often rush to deploy or experiment with ML and generative AI without grasping the technology’s deeper security implications.

When security teams skip a thorough review of how their ML systems actually work, they risk adopting controls that don’t address real threats and create a false sense of security. Truly effective ML threat modeling demands a close look at each component. Only then can we begin discussing valid mitigations.

Threat modeling of AI-based systems calls for a far more in-depth discovery phase that focuses on understanding the data the model uses as input and output, along with the processes engineers followed for training, tuning, and testing the model. Rather than relying on a checklist of “Do we have X control in place?”, threat modeling AI systems demands a thorough understanding of aspects unique to AI and ML development.

You want to understand:

How the model was built, trained, and tuned.

The data behind the model: inputs, outputs, training data, and test data.

Who the target audience is.

The infrastructure supporting the model.

Potential attack methods and attacker motivations.

How the model might cause harm or create risks in terms of safety.

Understand the machine learning model

Without a clear grasp of an AI system’s data flow, including how inputs are processed and outputs are used, we risk overlooking vulnerabilities that could cause significant harm. Understanding the design (inputs, outputs, and data flow) of AI-based systems is critical for identifying potential threats.

For instance, prompt injection in an LLM might seem harmless if it only affects what the model says to a user. But if the output updates a database, it could store malicious data, or if it triggers an API call, it might enable fraudulent actions or data leaks. These risks highlight why a thorough understanding of AI system design is essential in threat modeling.
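
One way to picture the risk described above is to treat model output as untrusted input before it reaches a database or API. The following is a minimal sketch under stated assumptions, not a description of any production system: the action names, the JSON shape, and the length limit are all hypothetical and chosen only for illustration.

```python
import json
from dataclasses import dataclass

# Hypothetical allowlist: the only actions the application lets the model request.
ALLOWED_ACTIONS = {"summarize_session", "tag_element"}
# Hypothetical size limit for free text that will be stored downstream.
MAX_TEXT_LENGTH = 500


@dataclass(frozen=True)
class ModelAction:
    action: str
    text: str


def parse_model_output(raw: str) -> ModelAction:
    """Validate untrusted LLM output before it triggers any side effect."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc

    if not isinstance(payload, dict):
        raise ValueError("model output must be a JSON object")

    action = payload.get("action")
    text = payload.get("text")

    # Reject anything that is not an explicitly allowed action name.
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"action not allowed: {action!r}")
    # Reject missing, non-string, or oversized text.
    if not isinstance(text, str) or len(text) > MAX_TEXT_LENGTH:
        raise ValueError("text field missing, not a string, or too long")

    return ModelAction(action=action, text=text)
```

Validation like this does not stop prompt injection itself. It only narrows what an injected instruction can cause downstream, which is why the data flow has to be understood first.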

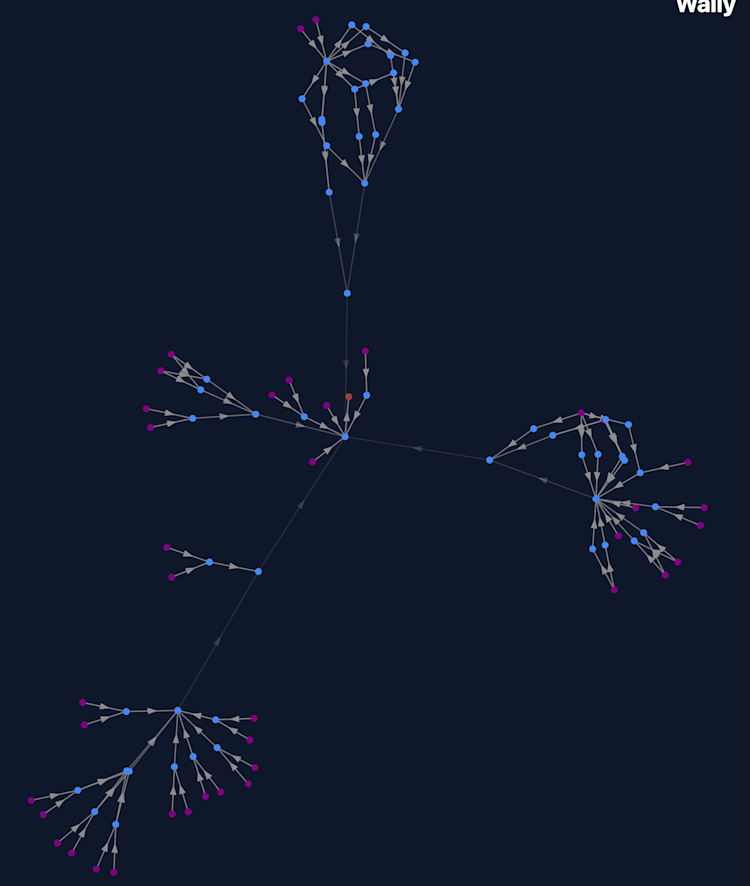

Part of our threat modeling process focused heavily on understanding the flow of data within our microservices. This involved examining how we collect and parse data for training our models, how we train and tune those models, and mapping how data flows and is processed from client requests to our cloud-hosted models. To achieve this, we leveraged reachability tools like Wally to map key data handling functions in our code, and conducted interviews with data scientists and engineers responsible for developing AI-based systems to better understand how those systems were architected.

Figure 1. Wally graph output (with function names removed) showing call paths to functions calling Gemini
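
The idea behind call-path mapping can be illustrated with a toy reachability search. This sketch is not how Wally works internally; it only shows the general technique of walking a call graph from an entry point to a function of interest. The graph and every function name in it are made up for illustration.

```python
from collections import deque

# Hypothetical call graph (caller -> callees). All names are illustrative.
CALL_GRAPH = {
    "handle_request": ["load_session", "build_prompt"],
    "load_session": ["read_events"],
    "build_prompt": ["call_llm"],
    "read_events": [],
    "call_llm": [],
    "export_csv": ["read_events"],
}


def find_call_paths(graph, start, target):
    """Return every acyclic call path from start to target, breadth-first."""
    paths = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for callee in graph.get(node, []):
            if callee not in path:  # skip cycles caused by recursion
                queue.append(path + [callee])
    return paths
```

With this graph, `find_call_paths(CALL_GRAPH, "handle_request", "call_llm")` returns `[["handle_request", "build_prompt", "call_llm"]]`, while `export_csv` has no path to `call_llm`. Each path found is a candidate route for user-influenced data to reach the model.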

Our threat model prioritized AI-based systems we have been working on for use by our customers, including:

Our Session Summaries feature, which provides concise text descriptions of end-user sessions

AI-based Suggested Elements, which helps Fullstory users govern their data taglessly

Additional ML-based features yet to be announced.

Understanding a model’s architecture and training process is essential to begin assessing its vulnerabilities.

Questions: Decipher the AI model's core

To effectively assess vulnerabilities, some foundational topics you should cover and document include:

Purpose and audience:

What problem does the model solve, and who is it designed for?

Model type:

Is it a neural network, a classical ML model, or a large language model (LLM)?

Training process:

Was it built from scratch or adapted using transfer learning from existing pretrained models?

How was it trained, tuned, and tested?

Monitoring:

What happens if the model starts “drifting” (losing accuracy over time)?

Is it retrained, flagged, or retired?

How often will it be tuned or retrained?

Are there mechanisms to detect if the model starts relying too heavily on certain features for predictions?

Dependencies:

What libraries or external systems does it rely on?
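
The monitoring questions above ask what happens when a model drifts. One common way to detect a shift in an input feature is the population stability index (PSI), sketched below in plain Python. This is an illustrative example only, not a description of any particular monitoring stack: the bin count and the small floor value are assumptions, and both samples are assumed to be non-empty lists of numbers.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare a baseline sample with a recent sample of one numeric feature.

    PSI = sum over buckets of (actual_share - expected_share)
          * ln(actual_share / expected_share).
    """
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if the baseline has a single distinct value.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Clamp values outside the baseline range into the edge buckets.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # A small floor (an assumption) avoids log(0) and division by zero.
        return [max(count / len(values), 1e-6) for count in counts]

    expected_shares = bucket_shares(expected)
    actual_shares = bucket_shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )
```

A PSI of zero means the two samples fall into the buckets in identical proportions. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, but the threshold that should trigger retraining, flagging, or retirement depends on the model and is worth documenting in the threat model.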

Additionally, an important point to remember when threat modeling systems that rely on GenAI and machine learning models is that the risks they pose to the company developing them may differ from those affecting the intended users.

You might need to consider threats and vulnerabilities impacting:

The company developing the models (e.g., Google, which develops Gemini).

The owner of the model, which in many cases can be the same as the developer. Model ownership defines the responsible parties for biases and model failures.

The companies paying to use these models (our customers).

And the end users who are ultimately affected by the model’s decisions and predictions (the users of our customers).

Note: For a deeper understanding of responsible and affected parties when evaluating the impact of ML model failures, the paper Who is Responsible for AI Failures? provides valuable insights into how accountability should be considered across stakeholders.

Understanding the responsibility model was key to our threat modeling process, as it provides necessary context for evaluating architectural decisions and potential vulnerabilities. In our case, our current AI features available to the public rely on a set of AI-based systems built by Google (such as Gemini). At no point is customer data used to train the third-party LLMs (such as Gemini) that features like Session Summaries or Suggested Elements depend on.

It's all about data

Failing to work with AI-ready data—or fully understand the nature of that data—can lead to privacy leaks, hidden biases, and regulatory pitfalls that undermine both user trust and organizational credibility. This is especially true when it comes to AI security. If you’ve ever been through an ML tutorial, you know that data is everything. Understanding the details of what data is used for training and inference, where it originates, and how it’s handled is essential. By examining data carefully, AI teams can enhance their systems’ security and strengthen user trust.

Questions: Examine AI's data landscape

Here are some specific questions we should be asking when it comes to ML:

Training, validation, tuning, and testing data:

What data powers the model, and where does it come from?

How were the training and validation sets constructed?

Do the features include protected characteristics?

Note: This analysis helps determine not only the sensitivity of the data but also whether the model might develop biases toward certain features, potentially impacting specific groups unfairly, such as women and people of color (see "correlated features" as an example).

Data capture mechanism:

How is the data used for training collected? For instance, casting a wide net during data collection could introduce risks of data leakage.

Note: In the ML context, "data leakage" can mean something different from what we typically consider in security. In ML, it refers to situations where information that would not be available at prediction time (for example, test-set records or a proxy for the label) unintentionally makes its way into training, inflating evaluation results and distorting the model’s behavior in production.

Input and output dynamics:

What are the inputs the model receives in production, and what does it output?

Is the input user-generated, or does it come from other parts of the system, such as a microservice?

Sources and features:

What features does the model rely on, and where are they drawn from?

Does the model receive these features directly from users during inference, or does it pull data from other sources?

How would changes to features—during both training and inference—impact the model’s safety and security?

Value of data to attackers:

What could attackers gain from accessing the training data?

Beyond PII, does the data include features that might allow the model to infer sensitive attributes, such as gender, race, or other protected characteristics? Sensitive or proprietary data can make the model itself an attractive target.

For instance, during our threat modeling process, we carefully examined the various features used for training all of our models to assess both the feasibility and potential impact of adversarial techniques like model inversion attacks.
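
The questions above about protected characteristics and inferable attributes can be partly explored with a simple proxy check: measure how strongly each training feature correlates with a protected attribute held out for auditing. The sketch below is a rough illustration only. The feature names, the sample values, and the 0.8 threshold are hypothetical, and Pearson correlation only catches linear relationships.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (spread_x * spread_y)


def flag_proxy_features(features, protected, threshold=0.8):
    """Return names of features that closely track the protected attribute."""
    return sorted(
        name
        for name, values in features.items()
        if abs(pearson(values, protected)) >= threshold
    )


# Hypothetical audit data: a binary protected attribute and two features.
protected_attribute = [0, 0, 1, 1, 0, 1]
candidate_features = {
    "avg_order_value": [10, 12, 30, 31, 11, 29],
    "session_length": [5, 9, 6, 8, 7, 7],
}
```

Here `flag_proxy_features(candidate_features, protected_attribute)` returns `["avg_order_value"]`: that column could let a model infer the protected attribute even though the attribute itself was never used as a feature.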

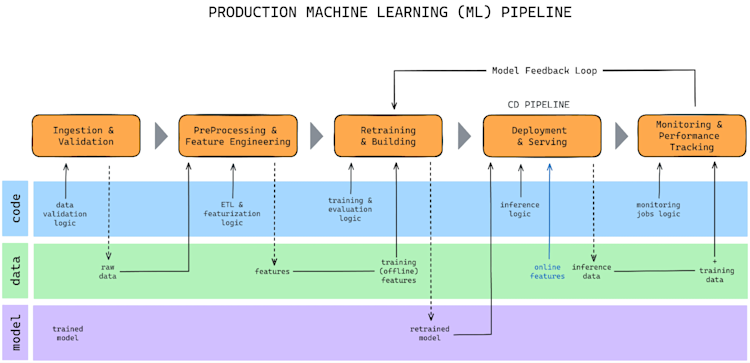

Figure 2. Threat modeling of AI-based systems should consider the entire ML development pipeline. Image credit: https://www.qwak.com/post/end-to-end-machine-learning-pipeline

By considering data at each stage, from initial training to final inference, we can identify vulnerabilities that might otherwise go unnoticed.
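
One stage-by-stage check that is easy to automate relates to the ML sense of "data leakage" noted earlier in this section: records that appear in both the training and test sets. The sketch below covers only that one form of leakage (exact duplicate rows) and assumes each row is a dictionary of JSON-serializable values; the helper names are hypothetical.

```python
import hashlib
import json


def row_fingerprint(row: dict) -> str:
    """Stable hash of a row, independent of key order."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_overlap(train_rows, test_rows):
    """Return the test rows that also appear verbatim in the training set."""
    train_hashes = {row_fingerprint(row) for row in train_rows}
    return [row for row in test_rows if row_fingerprint(row) in train_hashes]
```

A non-empty result suggests the test metrics are optimistic, since the model has already seen those rows. Subtler leakage, such as features derived from the label, needs a manual review of how each feature is computed.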

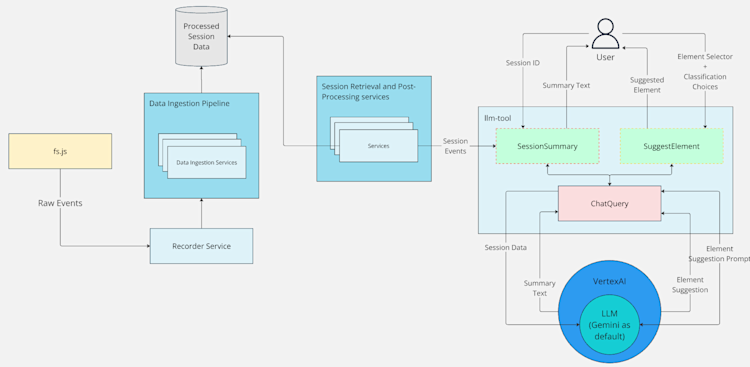

At Fullstory, we use NIST’s data-centric guide in SP 800-154 to frame our AI threat modeling, aligning with our focus on how data transformation, storage, and usage affect security. We began by creating a detailed graph that tracks data flow from user input to the models supporting our AI-based features. While we can’t share that internal graph, we’ve created simplified diagrams to help our support teams and customers understand these features at a high level. For example, the diagram below offers a concise view of data flow for our Session Summaries and Suggested Elements features.

Figure 3. High-level data flow for our Session Summaries and Suggested Element Features

Deployment and infrastructure

Securing the infrastructure behind AI-based systems requires more than just applying conventional security practices. Attackers who gain access to the model (whether during training, testing, or deployment) can inject malicious code, poison the model, or exfiltrate sensitive data. This is why treating an AI system like any other application is risky: its added complexity introduces new threats.

For instance, if an attacker compromises a Jupyter notebook in the cloud or backdoors a library dependency, they might hijack the entire AI stack.

Questions: Secure AI infrastructure

Questions about where a model is trained, tested, deployed, and accessed, as well as the type of infrastructure it relies on (cloud, edge, on-prem, or even developer laptops), reveal a lot about where vulnerabilities might lie:

Deployment and access:

How are models deployed and made available for inference?

Are they securely isolated?

Where are they trained?

Infrastructure dependencies:

What resources, databases, or services does the model rely on?

Are there cloud notebooks containing the model’s code?

Does the model or its supporting API rely on databases to pull or enrich inference data?

If an attacker backdoored the model through insecure dependencies or the use of insecure functions (e.g., pickle), what would they gain access to?

A recent article on Permiso’s blog demonstrates the importance of securing AI infrastructure, detailing how hosted models can be exploited when infrastructure security is overlooked.

Security of resources:

Are there adequate safeguards for sensitive data, and are access controls in place?

What security controls are implemented?
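
The question above about insecure functions such as pickle is easier to weigh with a concrete picture of the risk. Loading a pickle can call arbitrary functions, so a tampered model file can run code on whatever host loads it. The sketch below follows the "restricting globals" pattern from the Python documentation: it overrides `pickle.Unpickler.find_class` so only allowlisted names resolve. The allowlist contents and the `Malicious` class are illustrative assumptions, and formats that do not execute code on load are generally a safer choice than any pickle hardening.

```python
import io
import pickle

# Hypothetical allowlist: the only (module, name) pairs that may be resolved.
SAFE_GLOBALS = {("builtins", "list"), ("builtins", "dict"), ("builtins", "set")}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle stream references a global object.
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses globals outside the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Malicious:
    """Stand-in for a backdoored artifact (illustrative only)."""

    def __reduce__(self):
        # A real attack would name something like os.system here.
        # print keeps the demonstration harmless.
        return (print, ("code ran during unpickling",))
```

Calling `pickle.loads(pickle.dumps(Malicious()))` would invoke `print` during loading, whereas `restricted_loads` raises `pickle.UnpicklingError` before the call happens. Plain containers of numbers and strings still load normally because they reference no globals.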

ML infrastructure is inherently complex, requiring a detailed examination of how all components, from servers to APIs, interact and are deployed. In our threat modeling work, we ensured that our AI systems were deployed using the same secure pipelines we use for code. We also inventoried all infrastructure resources in Google Cloud Platform (GCP) and confirmed that best practices were followed for configurations. Wiz, our Cloud Security Posture Management (CSPM) tool, played an essential role by providing AI security monitoring. It lists all AI resources in our cloud environment and flags misconfigurations. Additionally, we set up new rules to alert us when AI-related resources are created.

Think outside the box

Treating AI security like ordinary application security can blind you to machine learning–specific threats. Hacking is about making a system do what it wasn’t designed to, so focusing on these unintended behaviors is more valuable than fixating on known attacks.

In a field as fast-moving as AI, it’s easy to get lost in buzzwords like “evasion” or “poisoning” without understanding the attacker’s true objectives. Ultimately, it’s not just about “breaking” the model; it’s about recognizing how adversaries might repurpose its capabilities for goals it was never meant to serve.

Questions: Probe unintended AI behaviors

Instead of just looking for bugs, effective AI security means anticipating how adversaries might force your AI to act in ways it wasn't designed to. This involves scrutinizing both how inputs could be manipulated and how outputs could be exploited for malicious ends, prompting several critical questions:

Misclassification:

What’s the impact if the model misclassifies an input? For example, could an attacker manipulate a recommendation engine to suggest inappropriate content?

Desired outputs:

What happens if the model produces an output that aligns with an attacker’s goals?

This could range from generating biased text or promoting specific products to illegitimately providing coupons and discounts.

Reliability:

If the model stops making accurate predictions, who gets hurt?

Is it just a minor inconvenience, or does it impact business operations?

Confidentiality:

Could the model reveal sensitive data when given certain inputs?

What value could an attacker gain by inferring training data from the model’s responses?

What would an attacker gain if they were able to steal the model, and how would that impact the model owner and its users (refer to the Proofpoint evasion incident as an example of a model extraction attack)?
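
For the confidentiality questions above, one narrow but concrete control is to scan model output for sensitive patterns before it is shown or stored. The sketch below redacts email addresses only. The regular expression is a deliberately simple assumption that will miss some valid addresses and other kinds of sensitive data, so it illustrates the idea rather than serving as a complete filter.

```python
import re
from typing import Tuple

# Simplified email pattern (an assumption, not a full RFC 5322 matcher).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> Tuple[str, int]:
    """Replace email addresses in model output and report how many were found."""
    return EMAIL_PATTERN.subn("[REDACTED_EMAIL]", text)
```

The returned count is useful on its own: a model that regularly emits addresses it was never given in the prompt is a signal worth investigating during threat modeling.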

A framework we’ve found particularly useful is Microsoft’s guide for Threat Modeling AI/ML Systems and Dependencies. While it highlights specific types of attacks, many of the questions are generalized to help reviewers understand the model’s capabilities and how a threat actor’s goals might lead them to, for instance, cause the model to return random classifications versus targeted ones.

As attackers experiment with new techniques, it’s crucial for security professionals to broaden their focus and think about a wider range of potential risks. Keep in mind that the field of adversarial attacks is still young, and many methods remain theoretical. By focusing on potential outcomes rather than specific attack types, we keep our threat modeling adaptive and forward-looking. At the same time, it’s important to stay informed by subscribing to newsletters like Clint Gibler’s TL;DR sec, following blogs such as SecuringAI and the Trail of Bits Blog, and reading academic papers.

Consider safety and ethical implications in AI

Harm mitigation should be a primary focus in threat modeling, especially for AI systems that directly interact with user data. And just because a model’s purpose sounds simple doesn’t mean we should overlook its potential impact on users’ lives.

For example, our Session Summaries feature offers a concise overview of a user’s activity, eliminating the need to watch the entire session. While threat modeling this feature, we examined the prompts and configurations of our GCP-hosted LLMs (through Vertex AI) to ensure that no protected characteristics or biases are introduced when crafting these summaries.

Similarly, for our other ML work, we carefully reviewed the model training features to prevent any protected characteristics from being included.

Questions: Mitigate harm and bias

Here are some key considerations we weighed in our own process:

Harm and bias:

Could the model’s predictions harm certain groups?

Over time, biases may emerge, impacting people based on gender, race, or ethnicity. These biases can lead to safety concerns, from physical harm to psychological harm, especially if specific populations are unfairly targeted.

Unintended consequences:

Even if the model’s purpose seems benign, consider potential ripple effects. For instance, an LLM might generate biased responses or misinformation if not carefully controlled, or it may use language offensive to certain groups. Large language models deployed via systems like Vertex AI allow you to set safety filters to reduce the chances of unintended, harmful responses.

In the world of AI, safety goes beyond traditional cybersecurity; it’s about ensuring the system doesn’t unintentionally harm users. In her paper titled Toward Comprehensive Risk Assessments and Assurance of AI-Based Systems, Heidy Khlaaf (2023) noted, “safety must center on the lack of harm to others that may arise due to the intent itself, or failures arising in an attempt to meet said intent.” This paper is worth a read: Khlaaf proposes a systematic approach to evaluating a system based on the harms it may cause with respect to “protected characteristics” such as age, gender, language, class, or caste, an approach that is necessary when reviewing the safety of AI-based systems.

Wrapping up

Threat modeling for ML and AI systems is as much an art as it is a science. A checklist-based approach might work for some applications, but with ML, we need a more nuanced perspective. By focusing on data, model dynamics, infrastructure, potential adversarial motives, and safety concerns, we can build comprehensive threat models that genuinely capture the unique risks these systems bring.

At Fullstory, our approach to securing ML and AI systems reflects this commitment. We understand that robust security involves not only protecting data and algorithms but also anticipating how these systems might evolve—and how attackers might exploit them. Threat modeling is more than just a box to check; it’s an ongoing process of understanding and adaptation, ensuring we’re prepared for whatever comes next.