Written by Ishan Chattopadhyaya, Apache Solr committer & PMC Member (@ichattopadhyaya) and Jaime Yap, Director of Engineering at Fullstory (@jaimeyap)

Last year, we shared our experiences migrating our primary analytics database from N1 to N2D instances based on second-generation AMD EPYC™ Rome processors in Google Cloud. We saw huge real-world improvements in both long-tail latency and overall system stability. So when we heard that Google was beta testing new VM instances based on third-generation AMD EPYC™ Milan processors, we were understandably excited to try them out!

By participating in the N2D-Milan beta, we were able to run a suite of database benchmarks using Solr Bench, an open source, Solr-specific benchmarking framework we built. Read on to learn more about our testing methodology and how you can try things out for yourself.

Test Setup and Methodology

Our core search and analytics infrastructure is built on the hugely popular open source Apache Lucene and Solr projects. We developed and open sourced a benchmarking framework (Solr Bench) to make it easy and repeatable to spin up a multi-node Solr cluster, index a custom dataset, and run custom queries against it. The framework controls for JVM JIT warmup, instance sizes, OS images, JDK versions, and GC algorithms, and it tracks CPU, query time, RAM usage, and other metrics. The suite produces a simple report at the end with the benchmark results.
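To make the warmup idea concrete, here is a minimal sketch of a warmup-then-measure loop. It is not Solr Bench's actual implementation; `run_benchmark`, `execute_query`, and the stand-in `fake_query` are hypothetical names used only for illustration.

```python
import time
from statistics import mean, median
from typing import Callable, Iterable, List


def run_benchmark(
    execute_query: Callable[[str], None],
    warmup_queries: Iterable[str],
    measured_queries: Iterable[str],
) -> List[float]:
    """Run warmup queries untimed, then return per-query latencies in ms."""
    # Warmup pass: timings are discarded so that JIT compilation and cold
    # caches do not skew the measured numbers.
    for query in warmup_queries:
        execute_query(query)

    # Measured pass: time each query individually.
    latencies_ms = []
    for query in measured_queries:
        start = time.perf_counter()
        execute_query(query)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


if __name__ == "__main__":
    # Hypothetical stand-in for a real Solr request: it only burns some CPU.
    def fake_query(q: str) -> None:
        sum(i * i for i in range(10_000))

    latencies = run_benchmark(fake_query, ["warm"] * 50, ["q"] * 200)
    print(f"mean={mean(latencies):.3f} ms  median={median(latencies):.3f} ms")
```

In a real run, `execute_query` would issue an HTTP request to the Solr node under test, and the warmup set would be kept separate from the measured queries.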

The Setup

While our harness is capable of setting up a multi-node Solr cluster, we decided to keep things simple this time and compare single nodes. Each node was configured as an N2D-Standard-8 (8 vCPUs, 32GB RAM) VM with network-attached PD SSDs. We tested a slightly modified fork of Solr 8, running on OpenJDK 11.0.10 on Debian 10 with the JVM heap size set to 24GB.

The N2D-Rome instances were standard N2D instances, while the N2D-Milan instances were provisioned by setting minCPUPlatform to “AMD Milan” in the Terraform GCP config.

We ran two separate tests: one using internal custom datasets and proprietary query workloads, and a second using an openly available dataset from Kaggle with generated filter, facet, and join queries (which we are releasing as open source so you can try them out for yourself). Both tests used a single collection with a single shard and a single replica, and both included some queries reserved for JIT warmup.

Results

Over the course of a day, the performance of the N2D-Rome instances varied across runs, sometimes by as much as 5%. The N2D-Milan instances, however, showed very consistent results on every run. While we aren’t certain, we believe this is because they were in a limited beta preview and therefore not subject to the noisy-neighbor vCPU issues that can affect N2D-Rome. With those qualifiers out of the way, the trend was clear: N2D-Milan outperformed N2D-Rome in every test, sometimes by quite a bit.
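One simple way to put a number on run-to-run variance like this is to compare the mean latency of each run. The sketch below is an illustration only: the function names are hypothetical, the sample values are made up, and the post does not say which variance measure was actually used.

```python
from statistics import mean, pstdev
from typing import Sequence


def relative_spread_pct(run_means: Sequence[float]) -> float:
    """Range of per-run means, (max - min) / mean, as a percentage."""
    return (max(run_means) - min(run_means)) / mean(run_means) * 100.0


def coefficient_of_variation_pct(run_means: Sequence[float]) -> float:
    """Population standard deviation of per-run means over their mean, in %."""
    return pstdev(run_means) / mean(run_means) * 100.0


# Made-up per-run mean latencies (ms) for two hypothetical instance types.
noisy_runs = [101.0, 97.5, 103.2, 99.1, 100.4]
steady_runs = [80.1, 80.3, 79.9, 80.2, 80.0]

for label, runs in (("noisy", noisy_runs), ("steady", steady_runs)):
    print(
        f"{label}: spread={relative_spread_pct(runs):.2f}%  "
        f"cv={coefficient_of_variation_pct(runs):.2f}%"
    )
```

The spread is easy to explain but sensitive to a single outlier run, while the coefficient of variation summarizes all runs; reporting both is a reasonable default.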

Internal Workload Results

Our internal dataset test had ~6 million synthetic documents, with several dozen fields per document. The queries were generated by an internal tool that simulates a range of loads designed to stress test the database. This test used a slightly modified version of Solr with proprietary indexing and query code relevant to Fullstory analytics (deployed as a Solr plugin). The workload made heavy use of multi-document joins, nested facets, and other CPU-intensive query patterns.

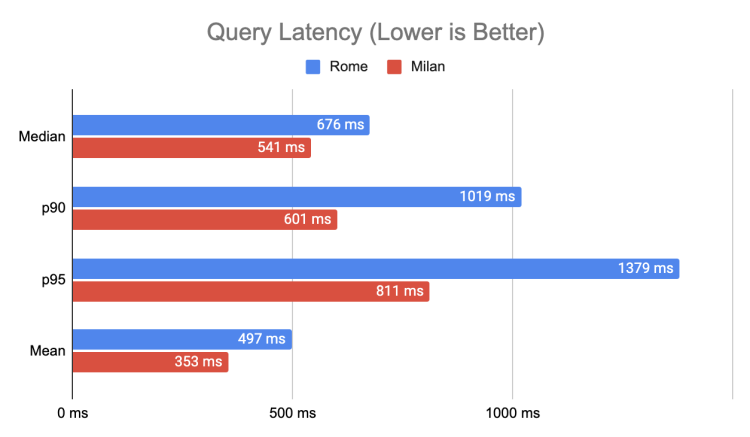

Compared to N2D-Rome instances, N2D-Milan instances were 28.9% faster on average for query latency, with a median latency improvement of 19.9%. We also observed indexing speedups (not shown on the chart) of ~10.6% on average.

Open Workload Results

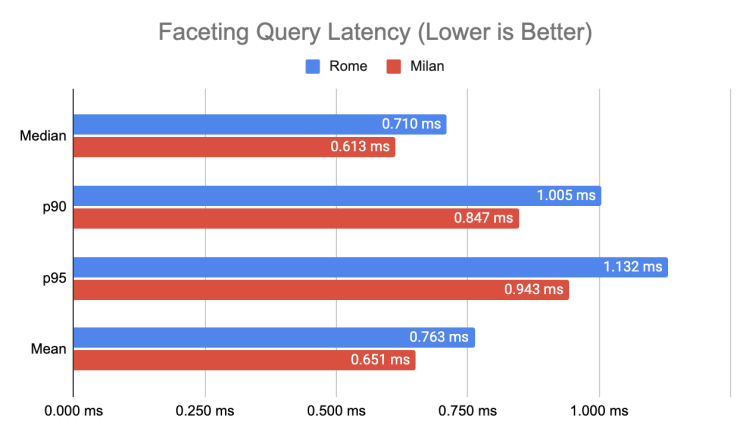

Posting benchmarks of proprietary, non-open source workloads isn’t nearly as satisfying as publishing results you can try to replicate yourself. So, we created additional benchmark configurations using a publicly available Kaggle dataset consisting of ecommerce user behaviour events and standard, non-proprietary Solr/Lucene queries. We created two different query workloads: one focusing on filtering and facets, and another focusing on joins. We generated queries at different time offsets to avoid internal caching.
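As a rough sketch of what generating queries at different time offsets could look like, the snippet below builds Solr request parameters whose filter range shifts on every query, so no two requests share an identical filter. The field names `event_time` and `event_type`, the window and step sizes, and the function names are assumptions for illustration; the queries used in the benchmark itself may be built differently.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List
from urllib.parse import urlencode


def solr_ts(dt: datetime) -> str:
    """Format a datetime as the ISO 8601 UTC timestamp Solr date fields use."""
    return dt.strftime("%Y-%m-%dT%H:%M:%SZ")


def generate_facet_queries(
    start: datetime,
    count: int,
    window: timedelta = timedelta(hours=1),
    step: timedelta = timedelta(minutes=7),
) -> List[Dict[str, str]]:
    """Build `count` facet queries, each over a differently offset time window."""
    queries = []
    for i in range(count):
        lo = start + i * step  # shift the window start for every query
        hi = lo + window
        queries.append({
            "q": "*:*",
            # Hypothetical date field; the range differs per query.
            "fq": f"event_time:[{solr_ts(lo)} TO {solr_ts(hi)}]",
            "rows": "0",
            "facet": "true",
            "facet.field": "event_type",  # hypothetical facet field
        })
    return queries


# Print URL-encoded query strings for a few generated queries.
for params in generate_facet_queries(datetime(2019, 10, 1, tzinfo=timezone.utc), 3):
    print(urlencode(params))
```

Because each filter covers a distinct range, repeated requests are less likely to be answered from a previously cached filter result, which keeps the measurement focused on query execution.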

Here, we see more modest performance improvements, with p95 latencies improving by about 16.7% and average latency improving by about 14.6%. Indexing performance (not shown on the chart) improved by about 4.6% on average. It is worth noting that this dataset is very simple, with only nine basic columns.

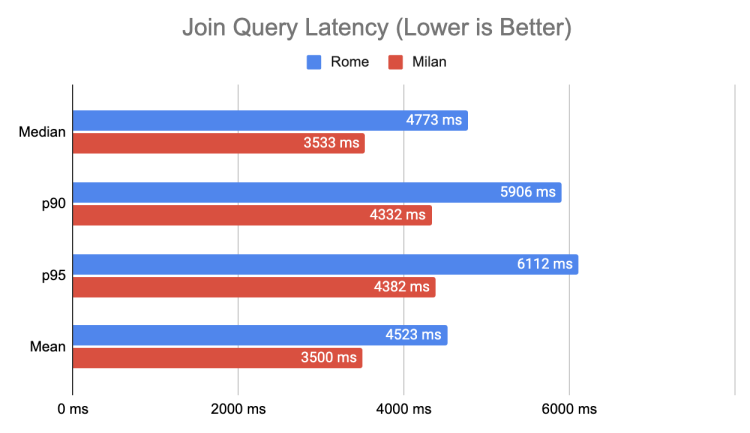

Our join query results showed a larger performance delta than the facet queries, with p95 latencies improving by about 28.3% and average latency improving by about 22.6%. The join queries are individually far more compute-intensive than the facet queries, so it is interesting to see the larger gains show up in the more expensive workload.
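For readers who want to compute comparable numbers from their own runs, here is a small sketch of summarizing latency samples and expressing the difference as a percentage. It assumes "improvement" means the reduction in latency relative to the baseline, which the post does not define explicitly, and it uses a nearest-rank percentile; the function names and sample values are made up for illustration.

```python
import math
from statistics import mean
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with >= pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100.0))
    return ordered[rank - 1]


def improvement_pct(baseline: float, candidate: float) -> float:
    """Percent reduction in latency of `candidate` relative to `baseline`."""
    return (baseline - candidate) / baseline * 100.0


# Made-up latency samples (ms) for a baseline and a candidate instance type.
baseline_ms = [120.0, 135.0, 110.0, 400.0, 128.0, 131.0, 119.0, 142.0]
candidate_ms = [100.0, 108.0, 95.0, 290.0, 104.0, 109.0, 99.0, 115.0]

avg_gain = improvement_pct(mean(baseline_ms), mean(candidate_ms))
p95_gain = improvement_pct(percentile(baseline_ms, 95), percentile(candidate_ms, 95))
print(f"average latency improvement: {avg_gain:.1f}%")
print(f"p95 latency improvement: {p95_gain:.1f}%")
```

Other percentile definitions (for example, linear interpolation) give slightly different values on small samples, so it helps to state which one a report uses.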

Conclusions

Benchmark results are always highly workload dependent, and our tests reflect that. We saw larger speedups with heavier query workloads, and the largest speedups in our own proprietary workloads. What this tells us is that for the same instance type, at the same price point, we could see ~29% query performance gains on average, with potentially larger gains for our p95 and p99 queries. These are really impressive generational gains, and we expect them to translate into dramatically improved utilization and better experiences for our customers. We can’t wait to get these instances deployed across our infrastructure in the coming months.

And if you’re interested in replicating some of the results in this blog post, feel free to try out Solr Bench for yourself!