If you want to be better at business, any business, it comes down to data. The best decisions rest on statistical evidence, not hunches.

Data doesn’t always tell us the exact way to go in every instance. Sometimes, we have to feel our way by running small tests with pinpoint hypotheses. One way to do that is A/B testing.

A/B testing studies causality: you create two near-identical versions of something and measure the effect of a single change between them.

What is A/B testing?

A/B testing is an experimentation technique in which two or more versions of a variable are shown to different users to determine which version has the greatest impact.

The goal: to improve your key performance metrics.

A/B tests are often part of a conversion rate optimization (CRO) strategy. Teams run them to gather qualitative and quantitative data and improve actions across the customer journey.

Even the simplest changes—for instance, the color of a button—can impact conversion rates.

How does A/B testing work?

The basic A/B testing process looks like this:

Make a hypothesis about one or two changes you think will improve a conversion rate.

Create a variation for each, with one (and only one) change per variation.

Divide incoming traffic equally between each variation and the original version.

Give it some time (depending on your traffic volume), then evaluate the results.
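The traffic split in the steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular testing tool does it: the function name `assign_variant`, the variant labels, and the experiment name are all hypothetical. It hashes a user ID so that each visitor always lands in the same version and traffic divides roughly equally.

```python
import hashlib

# Hypothetical labels: the original version plus one variation.
VARIANTS = ["original", "variation"]


def assign_variant(user_id: str, experiment: str, variants=VARIANTS) -> str:
    """Deterministically map a user to one variant with roughly equal shares."""
    # Hash the experiment name together with the user ID so the same user
    # can fall into different buckets in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as an integer and take it modulo the variant count.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


def count_assignments(n_users: int, experiment: str) -> dict:
    """Count how many of n_users synthetic users land in each variant."""
    counts = {name: 0 for name in VARIANTS}
    for i in range(n_users):
        counts[assign_variant(f"user-{i}", experiment)] += 1
    return counts
```

Because the assignment depends only on the inputs, a returning visitor sees the same version on every visit, and no lookup table is needed to remember who saw what.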

Let’s dig into the ways your team can utilize A/B testing with Digital Experience Intelligence (DXI).

5 ways teams use A/B testing with digital experience

1. Testing URLs against each other

A common A/B test conducted by many product teams involves building two near-identical pages with unique URLs or URL query parameters—and testing them side by side.

Let’s say you’d like to test a photo background image against an illustration background image on a specific landing page. Your team could publish two nearly identical pages with a single difference between them:

website.com/page1.html

website.com/page2.html

Depending on what you’re testing for (conversion rate, time on page, scroll depth, or another metric), teams can use a Digital Experience Intelligence platform to discern a winner.
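If the metric is conversion rate, one common way to judge whether the difference between the two pages is more than noise is a two-proportion z-test. The sketch below is a general statistical illustration, not a description of how a DXI platform picks a winner. The function name and any visitor counts you pass in are assumptions for the example.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare two conversion rates; return (z statistic, two-sided p-value).

    conv_a / n_a: conversions and visitors for page A (e.g. page1.html)
    conv_b / n_b: conversions and visitors for page B (e.g. page2.html)
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (for example, zero conversions on both pages).
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A small p-value (0.05 is a conventional threshold) suggests the difference is unlikely to be chance alone. With low traffic the test needs more time to reach that point, which is why the waiting period depends on how many visitors each page receives.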

2. Testing elements with Session Replay

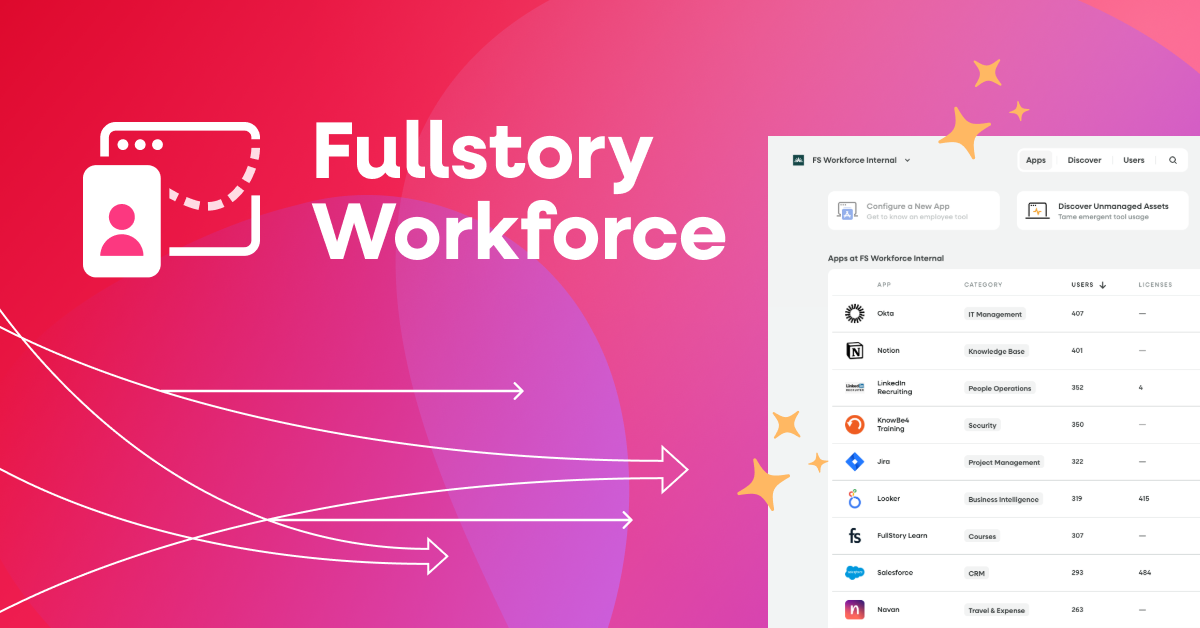

If you’re using a DXI tool such as Fullstory, it’s simple to use Session Replay to test elements.

Session Replay shows a complete picture of the user on a website or app. It transforms logged user events, such as mouse clicks, scrolls, and taps, into a reproduction of what the user actually did.

In other words, Session Replay helps you see your site through your customer’s eyes.

You can use Session Replay to A/B test critical page functionality, such as:

Which landing page version has a longer time-on-page?

Which CTA converts more?

Which featured image works better?

3. Watched elements

Without the right tools, A/B tests can often be limited to comparing “took an action” to “did not take an action”—things that can be easily quantified, such as click-through rate.

But with DXI, teams can even test what people did not do.

If you’re looking to A/B test users who saw (or did not see) a particular element, this approach via Watched Elements is particularly useful. By monitoring whether an element rendered on a particular page and whether it was visible in the user’s viewport, you can test what you think users are seeing against what they actually see.
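To make the "rendered and visible in the viewport" idea concrete, here is a small geometric sketch. It is not how Watched Elements is implemented. The `Rect` type, the function names, and the 50% threshold are all assumptions chosen for illustration. It computes how much of an element's box overlaps the viewport.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rect:
    """An axis-aligned box in page coordinates (pixels)."""
    left: float
    top: float
    width: float
    height: float


def visible_fraction(element: Optional[Rect], viewport: Rect) -> float:
    """Return the share of the element's area inside the viewport (0.0 to 1.0)."""
    # An element that never rendered, or has no size, cannot be seen.
    if element is None or element.width <= 0 or element.height <= 0:
        return 0.0
    overlap_w = (min(element.left + element.width, viewport.left + viewport.width)
                 - max(element.left, viewport.left))
    overlap_h = (min(element.top + element.height, viewport.top + viewport.height)
                 - max(element.top, viewport.top))
    if overlap_w <= 0 or overlap_h <= 0:
        return 0.0  # rendered, but entirely outside the viewport
    return (overlap_w * overlap_h) / (element.width * element.height)


def was_seen(element: Optional[Rect], viewport: Rect, threshold: float = 0.5) -> bool:
    """Treat the element as seen if at least `threshold` of it was in view."""
    return visible_fraction(element, viewport) >= threshold
```

Splitting sessions by a flag like `was_seen` lets you compare users who actually had the element in view with users who only loaded the page.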

4. Integrations and A/B testing

By themselves, DXI tools just scratch the surface of A/B testing capabilities. For greater power, integrate a DXI platform such as Fullstory, along with the qualitative and quantitative data it gathers, with tools such as Optimizely.

Modern digital experience platforms are easily coupled with the integrations you need:

Qualtrics for survey metrics

Slack for cross-functional collaboration and messaging

Jira for process and workflow management

And many more

5. Data Layer Observation rule

As detailed by our data layer archives, most websites and applications have a series of layers:

Presentation layer—what the website visitor sees, typically built with HTML and CSS.

Data layer—the layer for collecting and managing the data produced.

Application layer—all the tools connected to your website (Google Analytics, Facebook Pixel) which are typically JavaScript snippets.

Every website has a presentation layer, and most sites have an application layer, but not every site has a data layer to collect data. When you rely on the presentation layer to collect data, any change you make to your site will impact the data you collect. Small design changes can mean big data problems.

With DXI, you can write a Data Layer Observer (DLO) rule and deploy it with a snippet so that A/B test data flows into Fullstory. The DLO gives you a script to add to your site that brings data layer variables into Fullstory. All you need to do is add “rules” that decide which variables are observed. This provides you with a ready-to-use rule for A/B testing.
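The general idea of rules that pick variables out of a data layer can be sketched as follows. This is a conceptual illustration only and does not reproduce Fullstory's actual DLO rule syntax. The rule shape (`name` and `source` keys), the function names, and the sample data layer are all hypothetical.

```python
from typing import Any, Dict, List


def read_path(data_layer: Dict[str, Any], path: str) -> Any:
    """Follow a dotted path such as 'experiment.variant' through nested dicts."""
    current: Any = data_layer
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None  # the variable is not present in this data layer
        current = current[key]
    return current


def apply_rules(data_layer: Dict[str, Any], rules: List[Dict[str, str]]) -> Dict[str, Any]:
    """Collect the variables named by each rule, skipping any that are missing."""
    observed: Dict[str, Any] = {}
    for rule in rules:
        value = read_path(data_layer, rule["source"])
        if value is not None:
            observed[rule["name"]] = value
    return observed


# Hypothetical data layer and rules for an A/B test on a landing page.
sample_data_layer = {
    "experiment": {"id": "hero-image", "variant": "illustration"},
    "page": {"template": "landing"},
}
sample_rules = [
    {"name": "experiment_id", "source": "experiment.id"},
    {"name": "experiment_variant", "source": "experiment.variant"},
    {"name": "cart_total", "source": "cart.total"},  # absent, so it is skipped
]
```

Calling `apply_rules(sample_data_layer, sample_rules)` returns only the experiment ID and variant. Reading from the data layer rather than from the presentation layer means a design change to the page does not alter which variant gets recorded.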

DXI: The right tool for the job

We all know that A/B testing can speed up optimization, but the right tool for the job is key.

These examples show how a DXI solution can help you significantly improve your website’s UX by eliminating weak links and finding the best-performing version of your site. With them, you’re on the right path to A/B testing success.
