What is A/B testing?

A/B testing, also called split or bucket testing, involves presenting two versions (A as the control and B as the variant) to comparable visitors or viewers simultaneously to see which one improves your chosen metric.

How does A/B testing work?

A/B testing works by comparing two versions of content to determine which one performs better. One version (A) is the control, while the other (B) includes a change you want to test. Users are randomly assigned to either version to reduce the risk of bias and ensure that differences in performance are due to the change being tested—not external factors.
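One common way to implement the random assignment described above is to hash a stable user identifier, so each visitor always sees the same version. The following is a minimal sketch, not a reference to any particular tool's method; the function name `assign_variant`, the experiment name, and the 50/50 split are assumptions made for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Return "A" (control) or "B" (variant) for a user, deterministically."""
    # Hash the experiment name together with the user ID so the same user
    # always lands in the same bucket for this experiment, while different
    # experiments bucket users independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


# Hypothetical usage: the IDs and experiment name are made up.
variant = assign_variant("user-123", "cta-button-copy")
```

Because the assignment depends only on the user ID and the experiment name, returning visitors keep seeing the same version without any assignment table being stored.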

The test runs for a set period to gather enough data for statistically significant results. Once complete, teams analyze metrics such as conversion rates, clicks, or purchases to determine which version performed better. These insights help inform decisions on which changes to implement for optimization.

6 key benefits of A/B testing

When there are problems in your conversion funnel, you can use A/B testing to pinpoint the cause. Some of the most common conversion funnel leaks include:

Confusing call-to-action buttons

Poorly qualified leads

Complicated page layouts

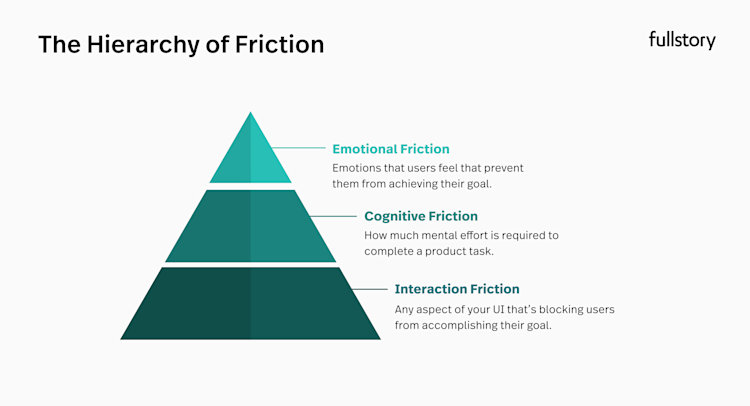

Too much friction leads to form abandonment on high-value pages

Bugs or frustration points during the checkout process

With A/B testing, you can target these areas by experimenting with different landing pages, CTAs, and layouts to pinpoint exactly where users are dropping off.
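To decide which funnel stage to test first, it can help to compute the drop-off between consecutive steps. This is a minimal sketch under stated assumptions: the step names and visitor counts are invented for illustration, and `funnel_dropoff` is a hypothetical helper rather than a feature of any analytics product.

```python
def funnel_dropoff(steps):
    """Given ordered (step_name, visitors) pairs, return the share of
    visitors lost at each transition between consecutive steps."""
    report = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        drop = 1 - count_b / count_a if count_a else 0.0
        report.append((f"{name_a} -> {name_b}", drop))
    return report


# Hypothetical counts, for illustration only.
funnel = [
    ("landing", 10000),
    ("product", 4000),
    ("cart", 1200),
    ("checkout", 900),
    ("purchase", 300),
]
report = funnel_dropoff(funnel)
# The transition with the largest drop-off is a candidate for the first test.
worst = max(report, key=lambda item: item[1])
```

With these made-up numbers, the largest relative loss is between the product and cart steps, so that page would be a reasonable first candidate for a test.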

1. Solve visitor pain points

When visitors come to your website or application, they usually have a goal in mind. They might want to:

Learn more about a deal or special offer

Explore products or services

Make a purchase

Read or watch content about a particular subject

Even casual browsing counts as a goal—users may simply want to explore and familiarize themselves with your offerings. Unfortunately, these goals can be hindered by roadblocks like confusing navigation, unclear copy, or broken CTAs that disrupt their experience.

Several tools can help you understand this visitor behavior and optimize user experiences. Fullstory, for example, offers heatmaps, funnel analysis, session replay, and other tools that reveal how users interact with your site. By analyzing this data, you can identify the source of user pain points and start fixing them.

2. Get better ROI from existing traffic

If your website or app attracts significant traffic, A/B testing can help you maximize ROI by improving conversion rates.

Instead of spending more money acquiring new visitors, A/B testing allows you to optimize your conversion funnel and identify which changes—such as improving CTAs, simplifying forms, or enhancing page layouts—will have the most significant impact on user experience and conversions.

This targeted approach often yields greater returns at a lower cost than continuously investing in new traffic acquisition.

3. Reduce bounce rate

Bounce rate measures how often visitors land on your site or app, view just one page, and then leave. It is calculated by dividing the number of single-page sessions by the total number of sessions on your website. While there are other ways to define bounce rate, they all point to the same thing: disengaged users.

A high bounce rate suggests that visitors are running into frustrating or confusing experiences on your website or mobile application that cause them to leave quickly. This is a great opportunity for A/B testing. By identifying high-bounce pages, you can test and tweak problem areas, then track the performance of each version until you see an improvement.
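As a quick illustration of the calculation, here is a minimal sketch. The function name and the session counts are assumptions chosen for the example.

```python
def bounce_rate(single_page_sessions: int, total_sessions: int) -> float:
    """Share of sessions in which the visitor viewed only one page."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    return single_page_sessions / total_sessions


# Hypothetical numbers: 420 single-page sessions out of 1,200 total sessions.
rate = bounce_rate(420, 1200)
print(f"Bounce rate: {rate:.0%}")
```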

Over time, these tests help uncover specific pain points and improve the overall user experience.

4. Make low-risk modifications

Making significant changes to your website or mobile application always comes with risk.

You could spend thousands of dollars on a full redesign for an underperforming campaign, but if those changes don't deliver results, you’ll lose time and money.

A better approach is to use A/B testing to make small, incremental changes instead of overhauling everything at once. This way, if a test fails, you’ve minimized the risk and cost while still gathering valuable insights to guide further improvements.

5. Achieve statistically significant results

A/B testing is only reliable when the results are statistically significant. This means the observed differences between variations are unlikely to be due to chance. Statistical significance gives you confidence that the results reflect real user behavior, making them actionable for decision-making.

A 95% confidence level (a significance threshold of 0.05) is the usual target for most tests. Some teams target 90% to reduce the required sample size and speed up results, but this increases the risk of false positives.

Several factors can prevent you from reaching statistical significance, including:

Not enough time to run tests

Pages with very low traffic

Changes that are too small to produce meaningful results

To achieve statistical significance quickly, you can test on high-traffic pages or implement larger, more impactful changes.
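To see how traffic and effect size interact, you can estimate the sample size each variation needs before you start. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. The 80% statistical power default and the example rates are assumptions that do not come from this article, and dedicated testing tools may use different calculations.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            confidence: float = 0.95,
                            power: float = 0.80) -> int:
    """Approximate visitors needed per variation to detect a change from
    `baseline` to `expected` conversion rate with a two-sided test."""
    alpha = 1 - confidence
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # value for the desired power
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline)
                             + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


# Hypothetical scenario: a 5% baseline conversion rate.
small_change = sample_size_per_variant(0.05, 0.06)  # hoping for 6%
large_change = sample_size_per_variant(0.05, 0.08)  # hoping for 8%
lower_confidence = sample_size_per_variant(0.05, 0.06, confidence=0.90)
```

Comparing the three values shows the trade-offs described above: a larger expected change or a lower confidence level both reduce the number of visitors required.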

6. Redesign websites to increase future business gains

A/B testing isn’t only for minor tweaks to your website or application. Even during a full redesign, this kind of testing can still be valuable. For example, you could run two versions of your site side by side and compare the results once each version has received enough visitors to reach statistical significance.

Even after the new site or application goes live, you should continue A/B testing. In fact, following a big launch is the perfect time to start refining and optimizing individual elements.

Common types of A/B testing

There’s no single right way to conduct A/B testing. Different methods suit different goals and use cases, so tailor your approach to your desired outcomes. That said, there are three common types of testing:

1. Standard A/B Testing

Standard A/B testing compares two versions of the same page or element, with users randomly assigned to variation A (control) or variation B. The goal is to measure which version drives better performance through statistically significant data.

For example, a retailer might test two versions of a product page—one with a more prominent "Add to Cart" button and another with a promotional badge—to see which version increases purchases.

2. Split URL Testing

Split URL testing is a variation of A/B testing where users are directed to different URLs instead of making changes to a single page. This kind of testing is helpful for major changes to design, structure, or backend functionality, such as page load optimizations or new workflows.

For example, a bank might use split URL testing to test a new version of its credit card application flow by directing some users to a completely redesigned application page hosted at a different URL.

3. Multipage Testing

Multipage testing applies a single change across multiple pages within a workflow or funnel. This is useful when optimizing entire user journeys rather than individual pages.

For example, a restaurant chain could add customer testimonials to the menu, reservation, and contact pages of its website to see if it increases overall reservation completions.

How do you choose which type of test to run?

Several factors determine which type of test to run for conversion rate optimization (CRO). Consider:

The number of changes you’ll be making

How many pages are involved

The amount of traffic required to get a statistically significant result

The size and impact of the problem you're trying to solve

Simple changes can often be effectively tested with standard A/B testing. More extensive changes involving multiple pages or total design overhauls may call for approaches like split URL or multipage testing.

How do you perform an A/B test?

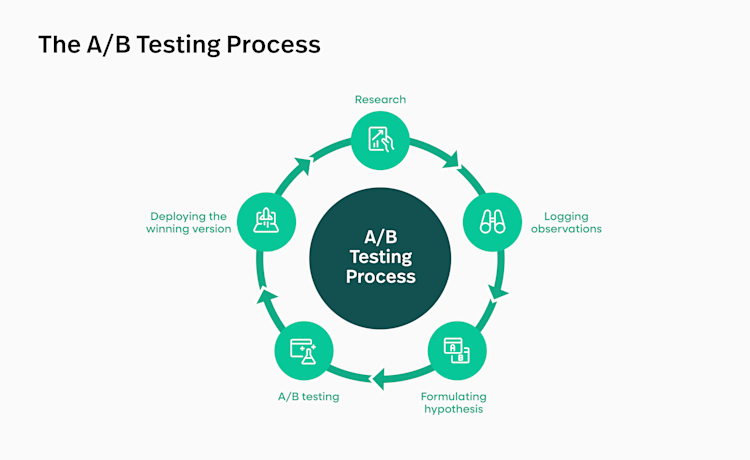

Following a structured process helps ensure your A/B test delivers reliable, actionable insights.

Step 1: Research

Before conducting tests or making changes, it's important to set a performance baseline. Collect both quantitative and qualitative data to learn how your website or application is performing in its current state.

The following elements represent quantitative data:

Bounce rate

Video views

Traffic

Subscriptions

Average items added to the cart

Purchases

Downloads

Much of this information can be collected through a behavioral data platform like Fullstory.

Qualitative data, on the other hand, focuses on the user experience and is typically collected through polls, surveys, or interviews. When these two types of data are used together, they provide a more complete picture of user behavior.

Step 2: Observe and formulate a hypothesis

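At this stage, you analyze your data and write down your observations. This helps you develop a hypothesis: a specific, testable prediction about which change will improve a metric and why. For example, "Shortening the sign-up form will increase completed sign-ups because fewer visitors will abandon it." In essence, A/B testing is hypothesis testing.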

Step 3: Create variations

A variation is a new version of a page that includes the changes you want to test. A good rule of thumb is to optimize areas where users tend to drop off, such as sign-up forms or checkout pages.

Try to keep your test focused—changing too many elements at once can make it difficult to determine which change drove the result.

Step 4: Client-side vs. server-side A/B testing

Before running your test, you'll need to decide whether to deliver the variations to users through client-side or server-side testing.

With client-side testing, JavaScript runs in the user’s browser to adjust what they see based on the variation assigned. This method works best for front-end changes like fonts, color schemes, layouts, and copy. For example, if you’re testing the wording or color of a CTA button, client-side testing is ideal.

Server-side testing happens before the page is delivered to the visitor. It's more robust and suited for backend or structural changes, such as improving page load time or testing different workflows. This method allows you to test elements that affect performance and engagement, like speeding up checkout steps or altering database queries to reduce response time.

Step 5: Run the test

With variations and a testing method selected, it’s time to run your test. The length of the test depends on how much traffic you receive and the confidence level you want to achieve. A higher confidence level provides more reliable insights but requires more time and traffic.
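A rough duration estimate follows from dividing the total sample you need by the daily traffic that enters the test. This is a minimal sketch; the function name, the required sample size, and the traffic figures are all assumptions for illustration.

```python
import math


def estimated_test_days(sample_per_variant: int, daily_visitors: int,
                        variants: int = 2,
                        traffic_share: float = 1.0) -> int:
    """Days needed for every variation to reach its required sample size.

    traffic_share is the fraction of daily visitors included in the test.
    """
    eligible_per_day = daily_visitors * traffic_share
    if eligible_per_day <= 0:
        raise ValueError("the test must receive some traffic")
    return math.ceil(sample_per_variant * variants / eligible_per_day)


# Hypothetical inputs: 8,000 visitors needed per variation, 2,000 visitors a day.
days_full_traffic = estimated_test_days(8000, 2000)
days_half_traffic = estimated_test_days(8000, 2000, traffic_share=0.5)
```

Many teams also round the result up to whole weeks so that weekday and weekend behavior are both represented, though that is a judgment call rather than a rule.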

While the test is running, monitor performance and watch for potential technical issues. Additionally, try to avoid making changes mid-test, as this can compromise the accuracy of your results.

Step 6: Analyze results and deploy the changes

Once the test is complete, review the results and draw conclusions. If the data shows that one version clearly outperformed the other, you can deploy the winning change. However, not all tests produce conclusive results. In those cases, you may need to test additional changes or refine your hypothesis to gather further insights.

A/B testing is an iterative process. After one test, you might move on to other pages or stages of the customer journey. By continuously testing and optimizing different pages, elements, and features, you can improve the user experience and drive higher conversions over time.

How do you interpret the results of an A/B test?

A/B test results are measured by key performance indicators (KPIs), which reflect the actions you want users to take. These success indicators might include clicks, views, sign-ups, purchases, or downloads, depending on your goals.

After completing your test, you’ll evaluate whether one variation performed significantly better than the other based on statistical significance and margin of error.
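One widely used way to check significance and margin of error for conversion rates is a two-proportion z-test. The sketch below is an illustration under stated assumptions, not the method of any specific testing tool; the function name and the conversion counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                        confidence: float = 0.95) -> dict:
    """Compare conversion rates of control (A) and variant (B)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error, assuming no true difference between versions.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_null = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_null
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided test
    # Unpooled standard error for the margin of error around the difference.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    margin = NormalDist().inv_cdf(1 - (1 - confidence) / 2) * se
    return {
        "difference": diff,
        "margin_of_error": margin,
        "p_value": p_value,
        "significant": p_value < 1 - confidence,
    }


# Hypothetical results: 500 of 10,000 visitors converted on A, 600 of 10,000 on B.
result = two_proportion_test(500, 10000, 600, 10000)
```

The `difference` plus or minus `margin_of_error` gives a confidence interval for the lift. If that interval excludes zero, the result is significant at the chosen confidence level.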

Frequentist vs. Bayesian approaches

There are two common ways to interpret A/B testing results: frequentist and Bayesian.

Frequentist approach: This method starts from the assumption that there is no difference between variations (called the null hypothesis). Once the test is complete, you’ll calculate a p-value: the probability of seeing a difference at least as large as the one you observed if the null hypothesis were true. A low p-value (typically below 0.05) means the results are statistically significant. However, frequentist tests require the planned sample to be collected before results can be finalized, because repeatedly checking for significance mid-test inflates the false-positive rate.

Bayesian approach: Bayesian testing uses prior data and new evidence from the current test to update predictions. This method allows you to review results mid-test and call a winner early if one variation clearly outperforms the other.
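To make the Bayesian idea concrete, here is a minimal sketch that estimates the probability that B's true conversion rate is higher than A's. It assumes a uniform Beta(1, 1) prior, meaning no prior data, and uses simple random sampling. The function name, the prior, and the counts are assumptions for illustration; commercial tools may use different priors and decision rules.

```python
import random


def probability_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                          draws: int = 20000, seed: int = 0) -> float:
    """Estimate P(rate_B > rate_A) from Beta posteriors by sampling."""
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    wins = 0
    for _ in range(draws):
        # Posterior is Beta(1 + conversions, 1 + non-conversions)
        # when starting from a uniform Beta(1, 1) prior.
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical mid-test snapshot: 500/10,000 conversions on A, 600/10,000 on B.
chance_b_is_better = probability_b_beats_a(500, 10000, 600, 10000)
```

A team might agree in advance to call a winner once this probability passes a chosen threshold, such as 95%. The threshold itself is a business decision rather than something the method dictates.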

The top A/B testing tools to use

Several tools are available to help businesses set up, execute, and track A/B tests.

The best web and app A/B testing tools

Optimizely

Optimizely is a platform designed for conversion optimization through A/B testing. It allows teams to set up tests on website changes and collect data from real user interactions. Users are routed to different variations, and their behavior is tracked to determine which version performs better. In addition to A/B testing, Optimizely supports multipage and other types of testing to optimize various aspects of the user experience.

AB Tasty

AB Tasty facilitates A/B and multivariate testing, allowing testers to choose between client-side, server-side, or full-stack testing options. The platform also includes features like a Bayesian Statistics Engine to track and analyze results.

Both Optimizely and AB Tasty integrate seamlessly with Fullstory, so you can track user interactions with different test variations.

VWO

VWO is another major player in A/B testing and experimentation software. Like Optimizely and AB Tasty, it offers web, mobile app, server-side, and client-side experimentation, as well as tools for personalizing user experiences.

Email A/B testing tools

Moosend

Moosend helps marketers create and manage email campaigns, including A/B split testing. This feature allows teams to test different variations of marketing emails, measure user response, and identify the best-performing version.

AWeber

AWeber offers split testing for up to three email variations. Users can test elements like the subject line, preview text, message content, and send times. It also supports audience segmentation and allows testers to compare entirely different emails.

Mailchimp

Mailchimp allows users to A/B test email subject lines, sender names, content, and send times. Users can create multiple variations and control how recipients are split among them. Testers can define the conversion action and duration used to determine the winning variation, such as tracking open rates over an eight-hour period.

Constant Contact

Constant Contact offers automated A/B testing for email subject lines. Once the test is complete, the tool automatically sends the winning subject line to the remaining recipients, helping users optimize engagement with minimal effort.

A/B testing and CRO services and agencies

Some companies have the infrastructure and bandwidth to run their own experimentation programs, but others might not. Fortunately, there are services and agencies that can help drive your A/B testing and CRO efforts.

Conversion

Based in the UK, Conversion is one of the world's largest CRO agencies. It works with brands like Microsoft, Facebook, and Google.

Lean Convert

Also based in the UK, Lean Convert is one of the leading experimentation and CRO agencies.

Prismfly

Prismfly is an agency that specializes in ecommerce CRO, UX/UI design, and Shopify Plus development.

Create a data-driven culture with A/B testing

To unlock the full potential of A/B testing, businesses must go beyond isolated experiments and foster a culture of continuous data-driven decision-making.

Here are some tips to help you get started.

Encourage curiosity and experimentation

Teams should feel empowered to ask questions like, "What would happen if we changed this headline?" or "Could a simpler form design improve conversions?" By supporting experimentation at every level, you create an environment where ideas are tested and backed by evidence—not assumptions.

Break down silos

A/B testing is most effective when cross-functional teams, including those from product, marketing, design, and engineering, collaborate.

For example, product teams can optimize onboarding workflows, while marketing can test landing page copy. Sharing insights across departments prevents duplicated efforts and accelerates innovation.

Measure success and share wins

Celebrate and share testing insights across the organization. By communicating wins and lessons learned, you reinforce the importance of testing and encourage other teams to get involved. Over time, this helps establish a sustainable culture of optimization.

Further reading: Struggling to unlock the full potential of your data in your organization? Our 6 Hurdles of Modern Data Teams guide will help you address the biggest data-related challenges.

Drive data-driven success with Fullstory

A/B testing is an effective way to improve user experience and drive business growth through continuous optimization. Whether you're tweaking a landing page or conducting a full redesign, testing helps minimize risk, uncover visitor pain points, and maximize your existing traffic's potential.

Fullstory helps you take testing further by giving you the behavioral insights you need to create better experiences. Want to see how Fullstory can help your business? Get a demo today.